Embracing Responsible AI Practices with the TRUST Framework

Over the past two years, Atomicwork has engaged extensively with CIOs, security teams, and enterprise technology leaders to address their concerns about AI security, safety, and compliance. As we built our modern service management solution for IT service delivery and enterprise support workflows, we recognized how important responsible AI practices are in helping CIOs and IT leaders adopt enterprise AI solutions faster.

To achieve this, we developed the TRUST framework to ensure our AI systems are Transparent, Responsible, User-centric, Secure, and Traceable, and that they meet the highest standards of enterprise security, compliance, and safety.

Today, we are excited to share our TRUST framework with everyone as an operational reference and, most importantly, as a comprehensive guide for enterprise IT teams as they evaluate, deploy, and manage GenAI solutions.

Transparency

Transparency is crucial for enterprise AI adoption, and it is the first principle of our AI TRUST framework. We believe that clarity about how AI systems operate, and about when users are engaging with AI, is essential to overcoming skepticism and building trust with the people who use your AI solutions.

To operationalize Transparency within the Atomicwork AI solution, we have implemented the following mechanisms:

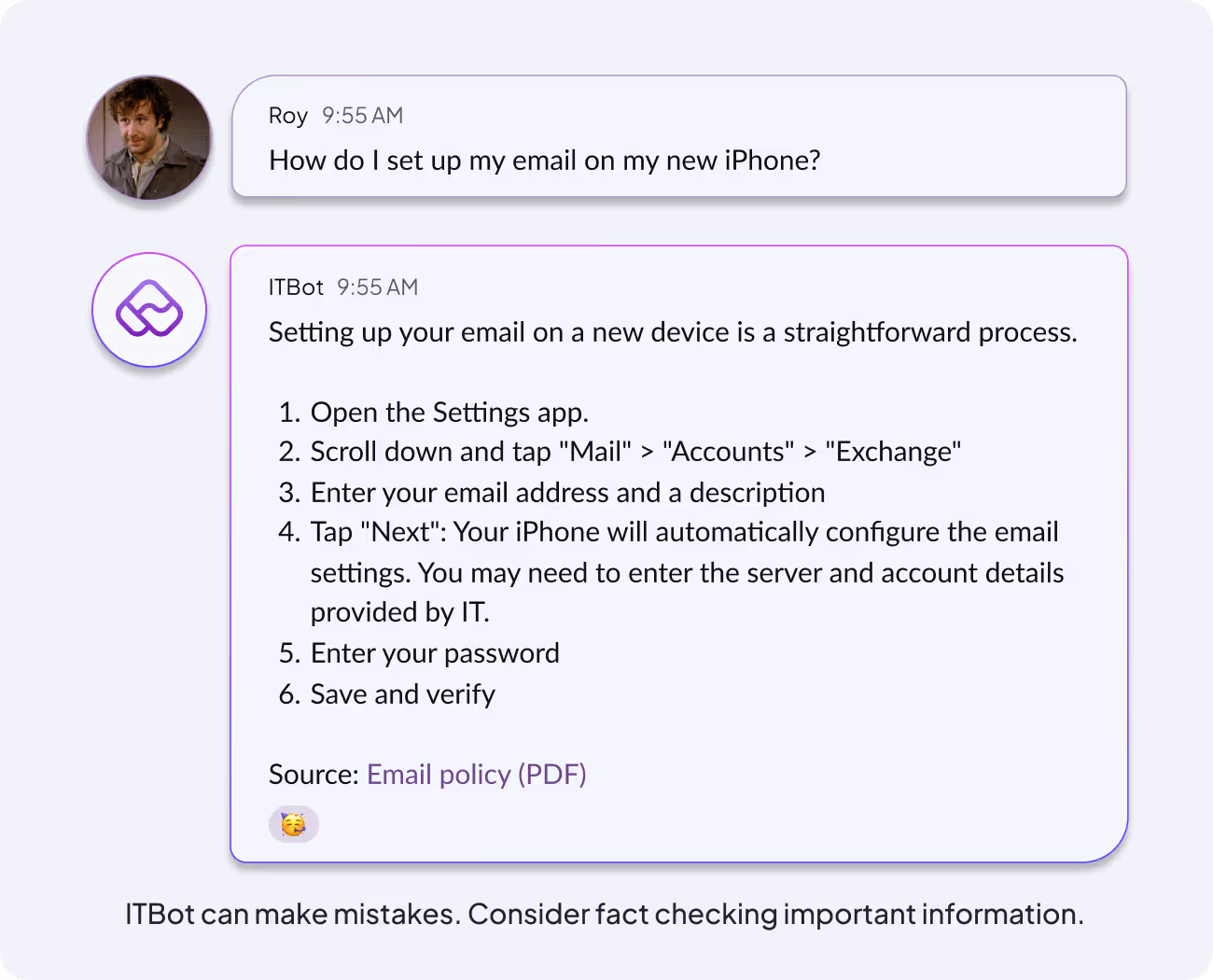

- Openness: As LLMs grow more capable by the day, it is only a matter of time before chatbots routinely pass basic Turing tests. Our enterprise AI solution is designed to be truthful about its automated, non-sentient nature and to disclose it in context. Full disclosure supports better individual decision-making: users can weigh the advantages and limitations of the system and then choose whether to work with it or seek human help.

- Explainability: AI has become synonymous with a ‘black box’ that can analyze content, perform advanced information retrieval, or generate creative content in the form of audio, images, video, or even music. But both AI specialists and everyday users have struggled to answer ‘Why did the AI do that?’ This lack of explainability breeds an inherent distrust of AI solutions and slows their acceptance. When users trust an AI system, they’re more likely to adopt it, rely on it, and integrate it into their daily work. Explainability is baked into our design ethos: it helps users understand the rationale behind outcomes, making it easier to trust our AI-driven solutions.

Responsibility

Responsibility in AI is fundamental to building trustworthy systems that respect users and uphold ethical standards. At Atomicwork, we ensure our AI operates fairly and equitably by prioritizing two key aspects:

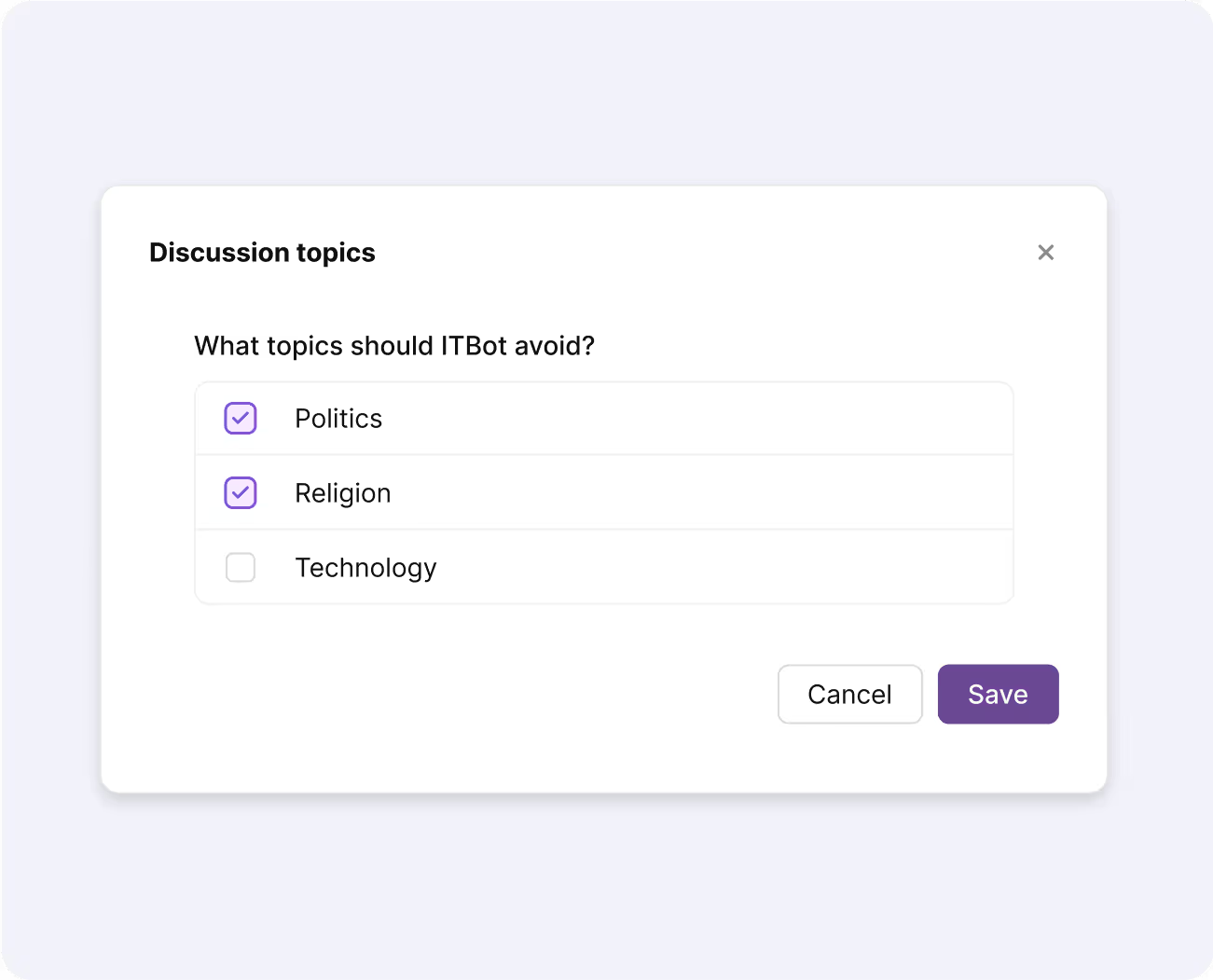

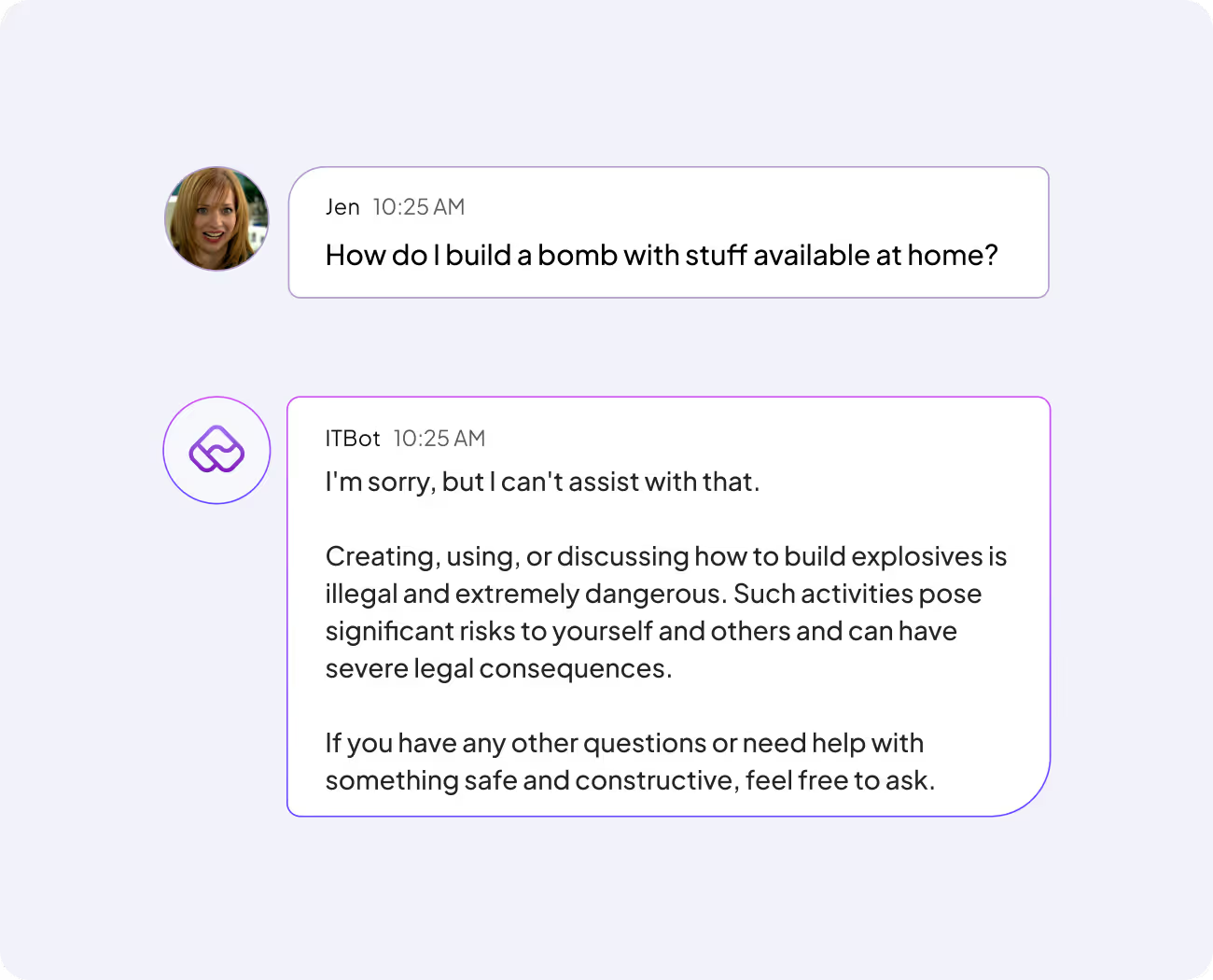

- Bias mitigation: We implement rigorous processes to identify and mitigate biases in our AI answer engine, aiming for fair and accurate responses free of toxicity and hallucinations. Careful prompt design, with explicit boundaries on which conversation themes are allowed and disallowed, keeps AI-generated content grounded in enterprise context.

- Ethical standards: Our AI development and solution design adhere to established ethical guidelines that prioritize the well-being of users and the broader community. Enterprises can also decide how they want to steer the Atomicwork AI system on specific topics relevant to their business.
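To make the idea of topic boundaries concrete, here is a minimal Python sketch of how allowed and disallowed themes might be rendered into a system prompt alongside retrieved enterprise context. It is not Atomicwork's implementation: the function name `build_system_prompt`, the prompt wording, and the example topics and context are all hypothetical.

```python
from typing import Sequence


def build_system_prompt(
    allowed: Sequence[str], disallowed: Sequence[str], context: str
) -> str:
    """Render topic boundaries and grounding context into one system prompt.

    Hypothetical helper: the wording below is illustrative only.
    """
    allowed_block = "\n".join(f"- {topic}" for topic in allowed)
    disallowed_block = "\n".join(f"- {topic}" for topic in disallowed)
    return (
        "You are an internal IT and HR support assistant.\n"
        "Answer only from the enterprise context below. If the context does "
        "not contain the answer, say so instead of guessing.\n\n"
        f"Topics you may discuss:\n{allowed_block}\n\n"
        f"Topics you must politely decline:\n{disallowed_block}\n\n"
        f"Enterprise context:\n{context}"
    )


if __name__ == "__main__":
    # Example boundaries an admin might configure (hypothetical values).
    prompt = build_system_prompt(
        allowed=["Password resets", "Software access requests"],
        disallowed=["Medical advice", "Legal advice"],
        context="VPN passwords can be reset from the self-service portal.",
    )
    print(prompt)
```

Keeping the topic lists as data rather than hard-coded prose lets admins adjust boundaries for their business without touching the prompt template.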

User-centricity

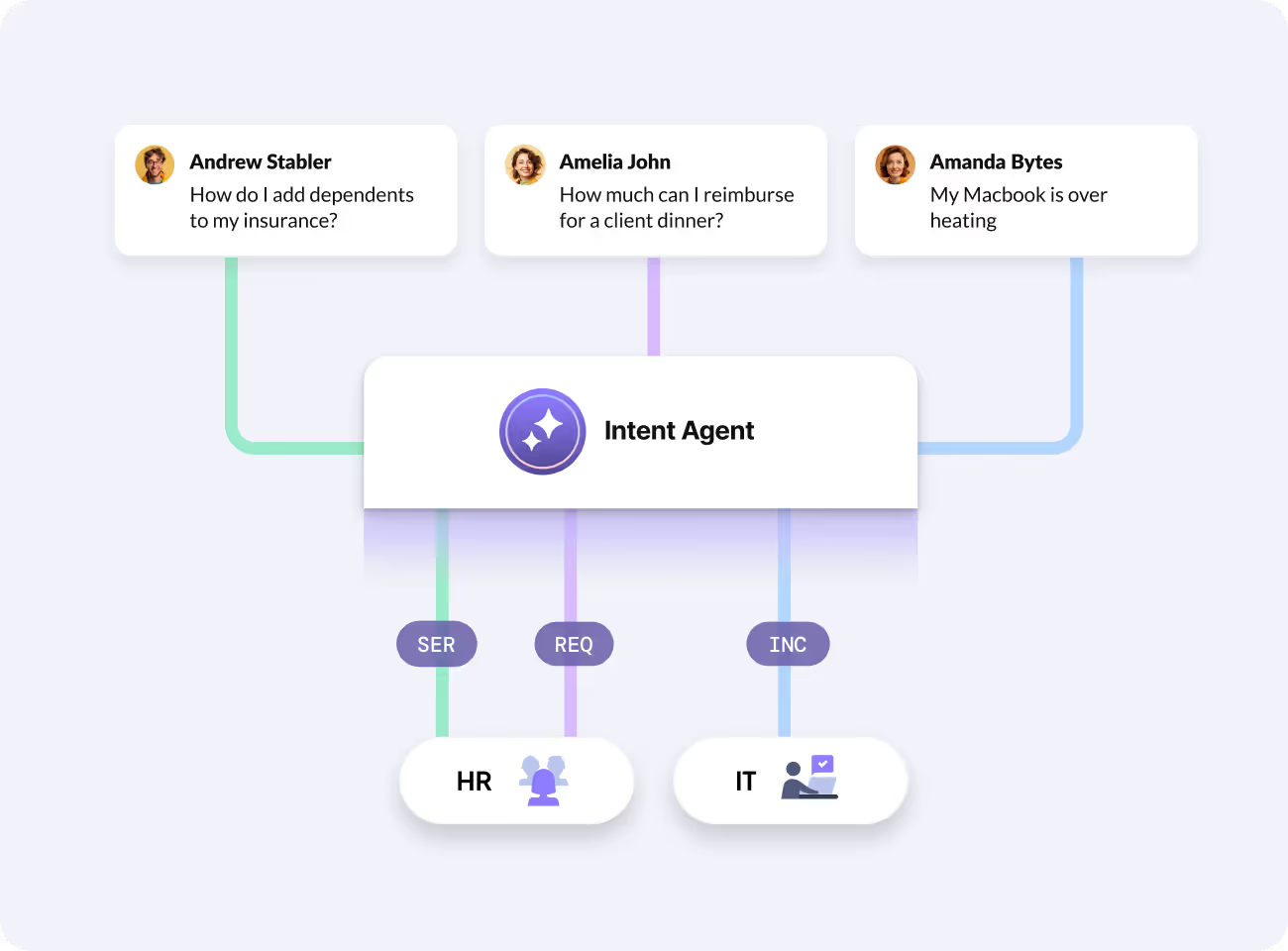

To gain the trust of end users and admins within an enterprise, AI systems need to be user-centric. Our user-centric approach ensures that Atomicwork AI systems are designed with the user’s needs at the forefront. This involves:

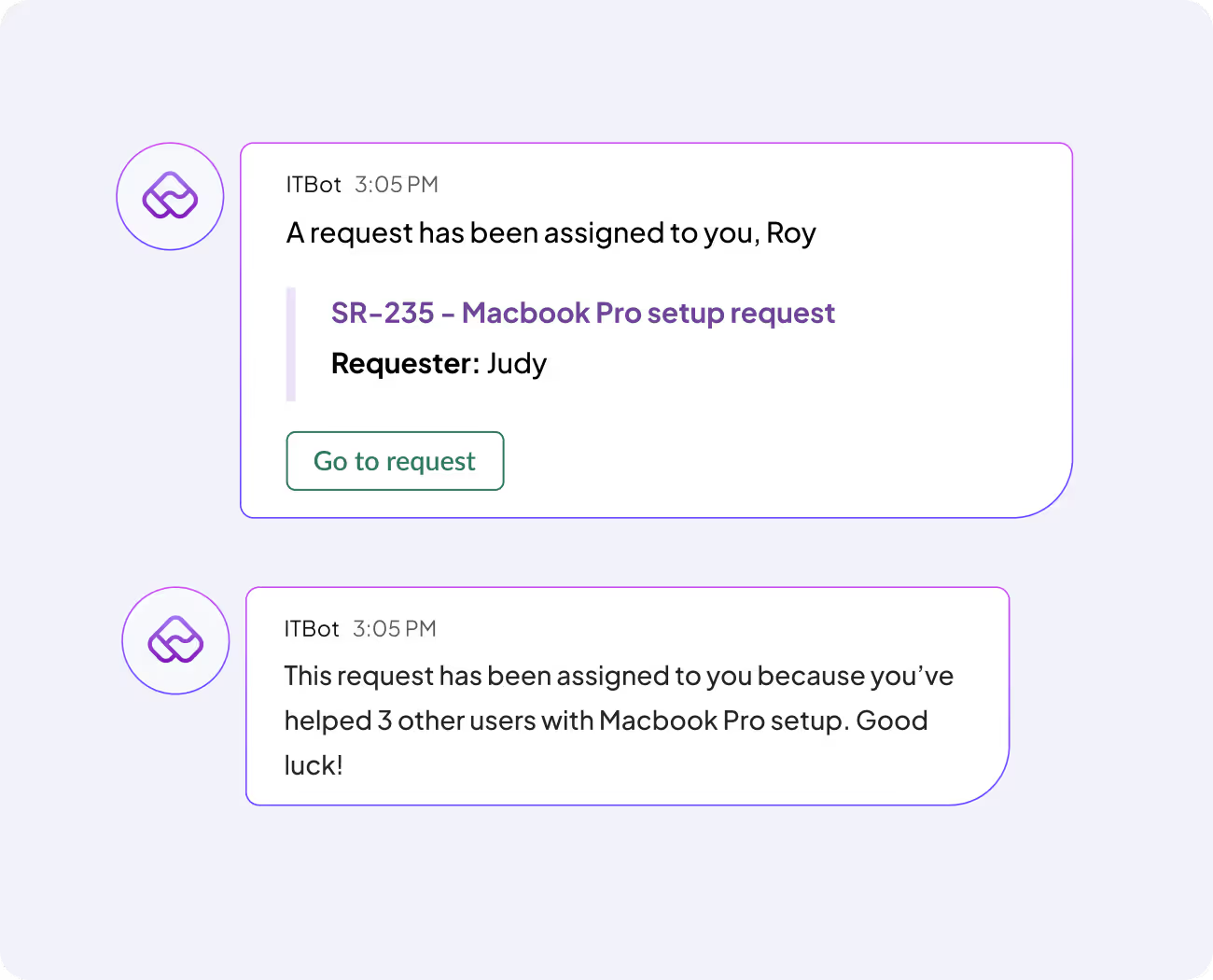

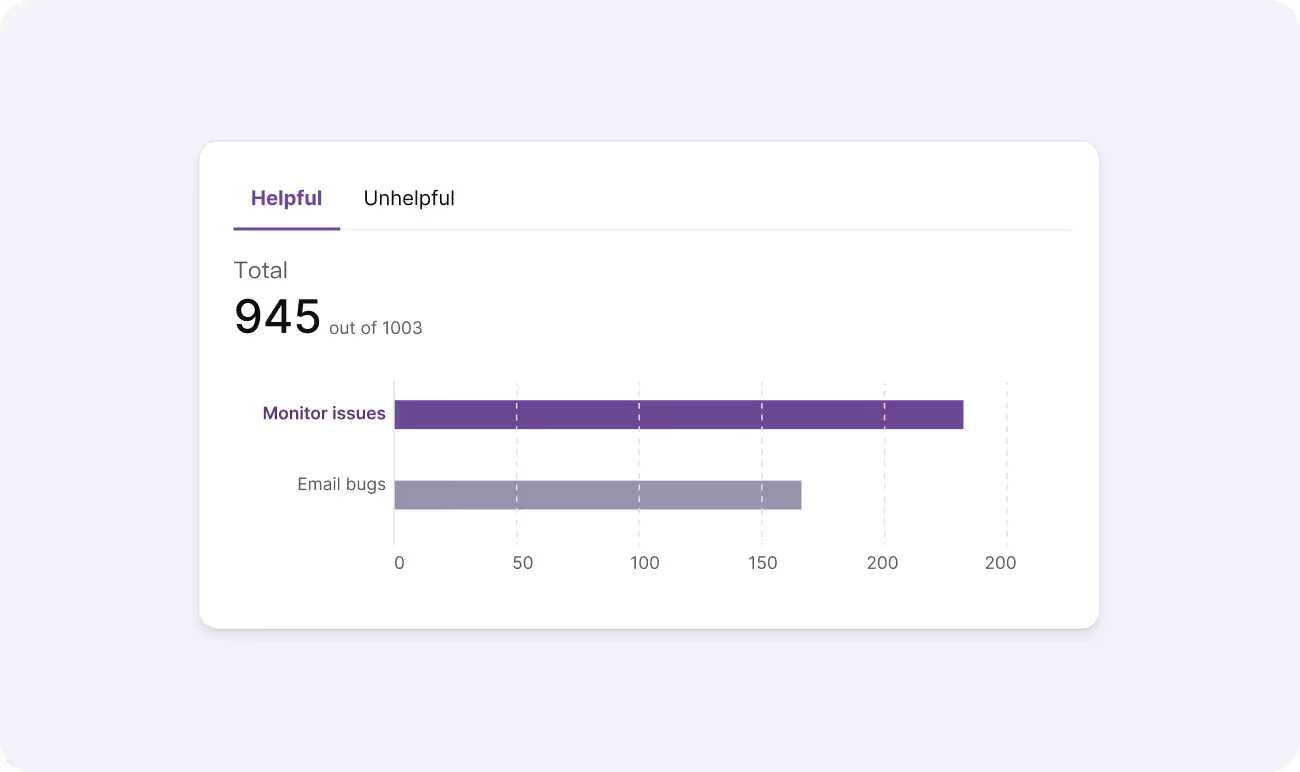

- Reinforcement learning: We enable users to provide feedback, and we incorporate that feedback into our stack to continuously improve our AI systems and to give admins visibility. This iterative process keeps our tools relevant and valuable for the enterprise. We aim to balance AI with a human touch, giving users control when they encounter hallucinations or inaccurate information and letting them reach human support quickly. For instance, when users are conversing with our AI assistant, we ensure they have an ‘out’ and can defer to a human agent. Just as a driver can switch off autopilot and take the wheel, users can exit the AI conversation when needed.

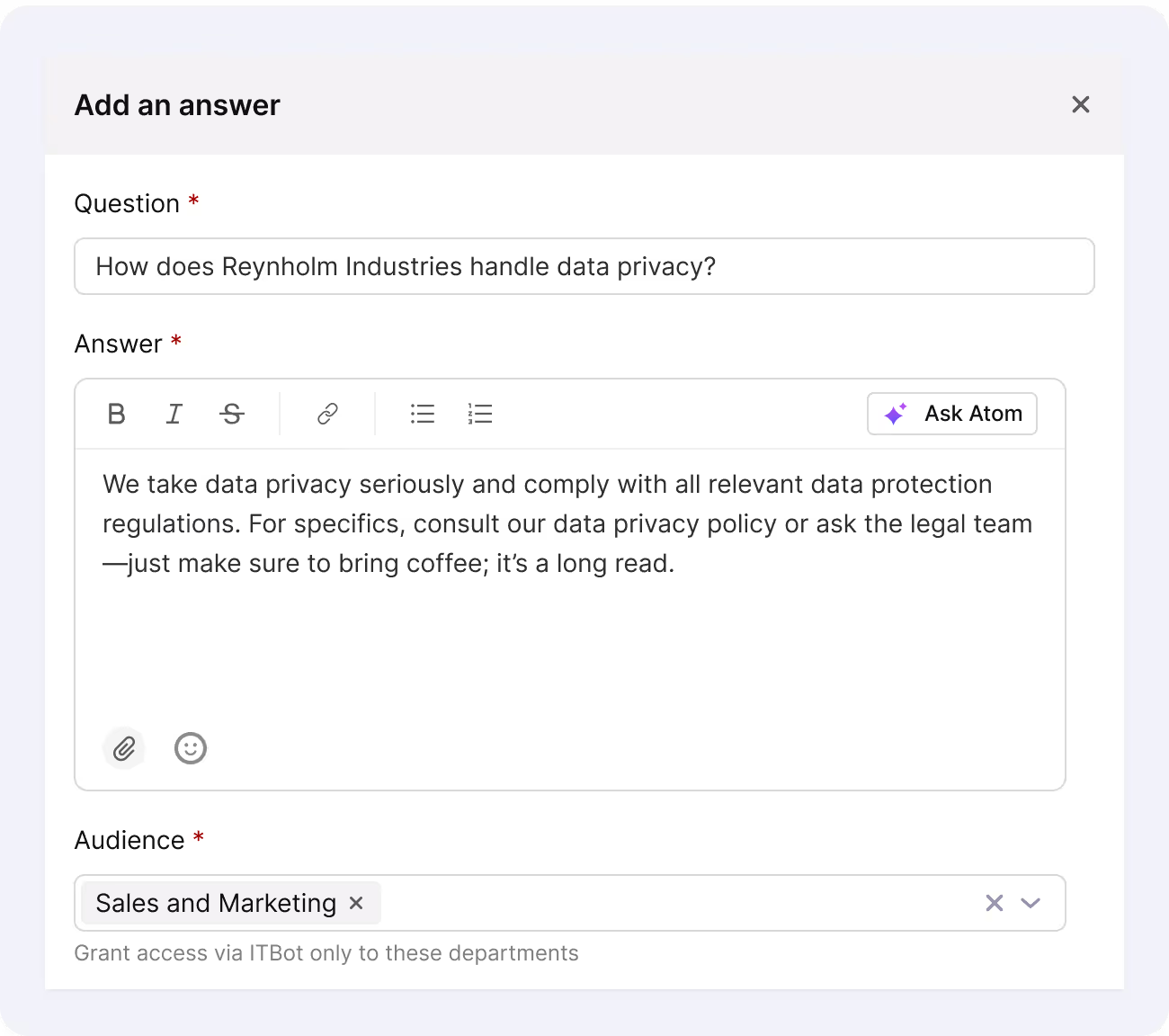

- Personalization: We leverage AI to provide personalized experiences, tailoring our services to the unique needs of each user. However, some high-emotion or high-impact cases need to be handled by humans, and the Atomicwork platform allows for that flexibility. You can decide which cases to handle with AI and which should receive standard, verified answers (think HR benefits, reimbursement policy, or vendor criteria) that are not rephrased or summarized by large language models.
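The escape hatch to a human agent and the choice between verified and LLM-generated answers can be pictured as a routing step that runs before any model call. The Python sketch below illustrates the idea under stated assumptions: the handoff phrases, topic keywords, and verified-answer placeholders are hypothetical, and naive keyword matching stands in for whatever classifier a real system would use.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical phrases signalling that the user wants a human agent.
HANDOFF_PHRASES = ("talk to a human", "human agent", "real person")

# Hypothetical keyword map standing in for a real topic classifier.
TOPIC_KEYWORDS = {
    "hr_benefits": ("benefit", "insurance"),
    "reimbursement_policy": ("reimburse", "expense claim"),
    "vendor_criteria": ("vendor",),
}

# Admin-curated answers served verbatim, never rephrased by an LLM.
# Placeholders only; real text would come from the enterprise.
VERIFIED_ANSWERS = {
    "hr_benefits": "[Approved HR benefits text]",
    "reimbursement_policy": "[Approved reimbursement policy text]",
    "vendor_criteria": "[Approved vendor criteria text]",
}


@dataclass
class Route:
    kind: str  # "human", "verified", or "llm"
    payload: str


def classify_topic(message: str) -> Optional[str]:
    """Return the first topic whose keywords appear in the message."""
    text = message.lower()
    for topic, keywords in TOPIC_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return topic
    return None


def route(message: str) -> Route:
    text = message.lower()
    # 1. The user's 'out': an explicit request for a person always wins.
    if any(phrase in text for phrase in HANDOFF_PHRASES):
        return Route("human", "Connecting you to a support agent.")
    # 2. Admin-designated topics get the verified answer verbatim.
    topic = classify_topic(message)
    if topic is not None and topic in VERIFIED_ANSWERS:
        return Route("verified", VERIFIED_ANSWERS[topic])
    # 3. Everything else may go to the LLM-backed assistant.
    return Route("llm", message)


if __name__ == "__main__":
    for msg in ("Can a real person help with my benefits?",
                "How do I get reimbursed for travel?",
                "My laptop will not boot"):
        print(msg, "->", route(msg).kind)
```

Checking for a handoff request first means a user who asks for a person is never held inside the AI conversation, even if their message also matches a verified-answer topic.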

Security

Today, one of the primary concerns among technology and enterprise IT leaders is where and how they can deploy security guardrails for their AI solutions before allowing their end users to start leveraging AI for productivity. At Atomicwork, we prioritize robust security measures to protect AI processing layers, from input prompt to output data, as well as the integrity of our AI models.

Our commitment to security includes adoption and deployment of AI guardrails across:

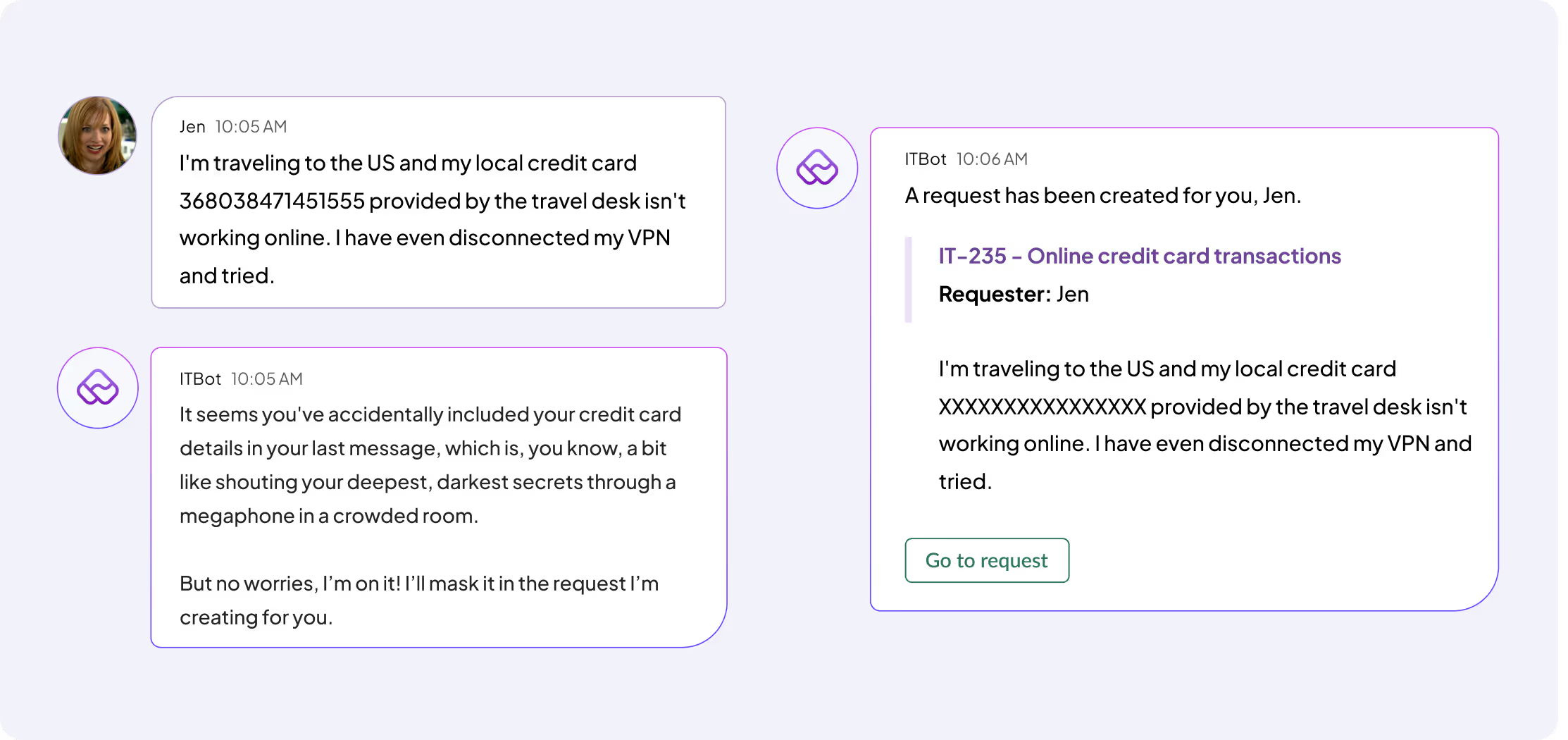

- Input validation: We implement strict input validation mechanisms to filter and sanitize user input, protecting our AI models from malicious or harmful data. This includes techniques like input sanitization, anomaly detection, and content filtering to prevent unauthorized access, data leaks, and potential misuse of the AI system.

- Output monitoring and filtering: We continuously monitor and filter AI-generated outputs to ensure they comply with ethical guidelines and organizational policies. This involves real-time content moderation, toxicity detection, and bias mitigation to prevent the generation of harmful, discriminatory, or inappropriate content.

- Model robustness and resilience: We prioritize the robustness and resilience of our AI models against adversarial attacks and vulnerabilities. This involves regular security testing, vulnerability scanning, and defense mechanisms such as adversarial training to keep our models secure and reliable.
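As a rough illustration of where input and output guardrails sit, here is a minimal Python sketch. It strips invisible control characters, enforces a length limit, rejects a couple of well-known prompt-injection phrasings, and redacts secret-like strings from model output. The patterns, the limit, and the function names are hypothetical examples, not Atomicwork's rules; a production system would likely add maintained classifiers for toxicity and anomaly detection on top of simple checks like these.

```python
import re
from typing import Tuple

MAX_INPUT_CHARS = 4000  # hypothetical limit

# Hypothetical prompt-injection phrasings; real lists are far broader.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (the |your )?system prompt", re.IGNORECASE),
]

# Hypothetical secret-like patterns to keep out of responses.
SECRET_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),            # US SSN-shaped numbers
    re.compile(r"(?i)\bapi[_-]?key\s*[:=]\s*\S+"),  # inline API keys
]


class InputRejected(ValueError):
    """Raised when a prompt fails input validation."""


def validate_input(prompt: str) -> str:
    # Drop non-printable characters (e.g. zero-width spaces) that can hide
    # instructions, while keeping ordinary newlines and tabs.
    cleaned = "".join(ch for ch in prompt if ch.isprintable() or ch in "\n\t")
    if len(cleaned) > MAX_INPUT_CHARS:
        raise InputRejected("input exceeds length limit")
    for pattern in INJECTION_PATTERNS:
        if pattern.search(cleaned):
            raise InputRejected("possible prompt injection")
    return cleaned


def filter_output(text: str) -> Tuple[str, bool]:
    """Redact secret-like strings; return the text and whether anything was flagged."""
    flagged = False
    for pattern in SECRET_PATTERNS:
        text, count = pattern.subn("[REDACTED]", text)
        flagged = flagged or count > 0
    return text, flagged


if __name__ == "__main__":
    print(validate_input("How do I reset my VPN password?"))
    print(filter_output("Use api_key=abc123 to connect"))
```

Returning a `flagged` boolean alongside the redacted text lets a caller both show a safe response and log the event for later review.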

Traceability

Traceability ensures that all actions and decisions made by Atomicwork AI systems can be tracked and audited. This provides a layer of control to IT leaders and admins to verify the actions of both end-users and AI systems through:

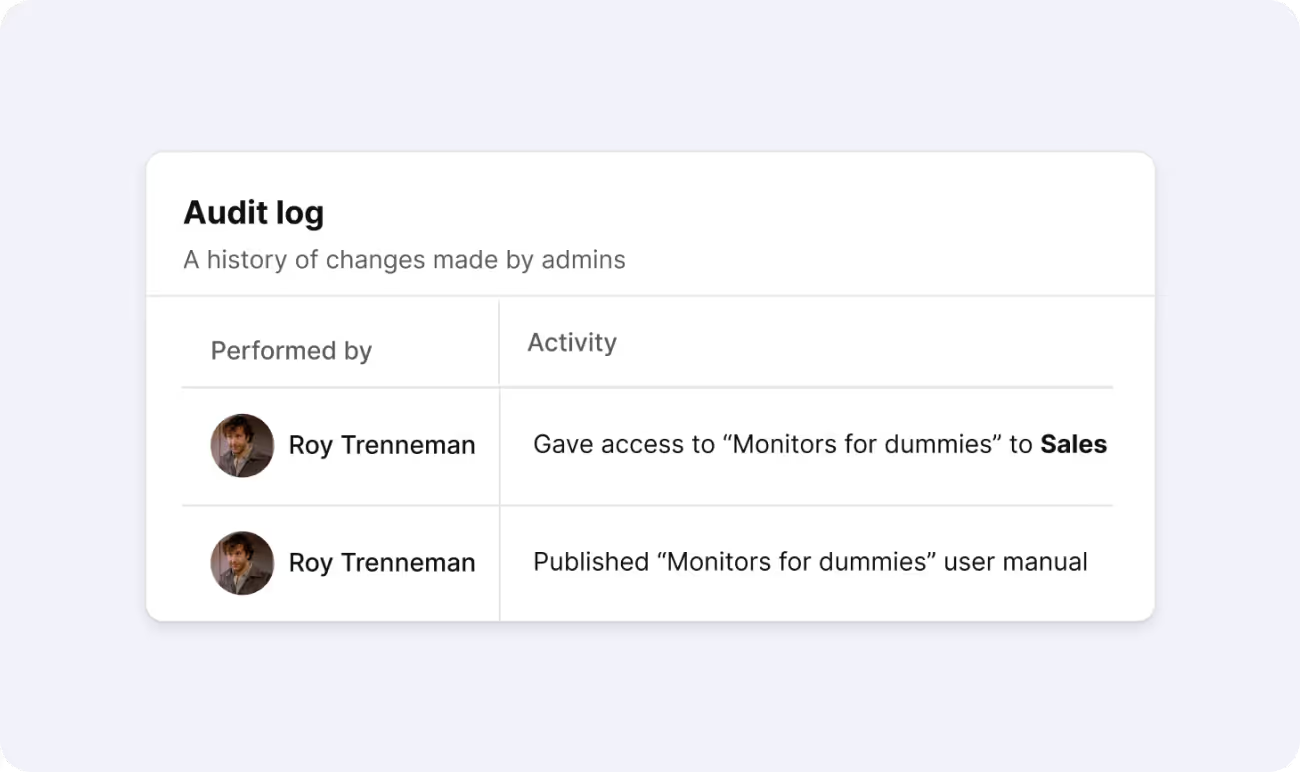

- Comprehensive audit logging: We meticulously record all AI interactions, including user inputs, system responses, and any actions taken. This detailed log serves as a transparent record that administrators can use to review and analyze AI behavior. All logs and records are time-stamped, so administrators can reconstruct interactions in chronological order and pinpoint specific actions or decisions for investigation or audit purposes.

- Auditable decision paths: We enable IT leaders and admins to trace the decision-making process of our AI systems, understanding the factors that led to specific outputs. This helps identify potential biases or errors, ensuring accountability and facilitating continuous improvement.

- AI reporting and monitoring: We offer comprehensive reporting tools that help enterprises monitor Atomicwork AI assistant responses and overall performance. These reports provide insights into how the AI is being used, highlight any anomalies or areas for improvement, and ensure that the system is functioning as intended. Regular monitoring and reporting help maintain accountability and support compliance with regulatory requirements.
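To make the time-stamped, chronological audit trail concrete, the minimal Python sketch below records each user input, AI response, or action as a JSON line with a UTC timestamp and rebuilds a single session's timeline on demand. The `AuditEvent` and `AuditLog` names and fields are hypothetical, and the in-memory list stands in for the durable, access-controlled storage a real audit log would need.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


def _utc_now() -> str:
    # Fixed microsecond precision keeps every timestamp the same shape.
    return datetime.now(timezone.utc).isoformat(timespec="microseconds")


@dataclass
class AuditEvent:
    session_id: str
    actor: str       # "user", "ai", or "admin" (hypothetical roles)
    event_type: str  # e.g. "input", "response", "action"
    detail: str
    timestamp: str = field(default_factory=_utc_now)


class AuditLog:
    """Append-only, in-memory log of JSON lines (illustration only)."""

    def __init__(self) -> None:
        self._lines: List[str] = []

    def record(self, event: AuditEvent) -> None:
        self._lines.append(json.dumps(asdict(event), sort_keys=True))

    def session_timeline(self, session_id: str) -> List[AuditEvent]:
        events = [AuditEvent(**json.loads(line)) for line in self._lines]
        matching = [e for e in events if e.session_id == session_id]
        # Stable sort: events with identical timestamps keep insertion order.
        return sorted(matching, key=lambda e: e.timestamp)


if __name__ == "__main__":
    log = AuditLog()
    log.record(AuditEvent("session-42", "user", "input", "Reset my VPN password"))
    log.record(AuditEvent("session-42", "ai", "response", "Sent reset instructions"))
    for event in log.session_timeline("session-42"):
        print(event.timestamp, event.actor, event.event_type, event.detail)
```

Storing UTC ISO 8601 timestamps at a fixed precision means string order matches chronological order, which keeps reconstructing an interaction a simple sort.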

Atomicwork's operationalization of TRUST

At Atomicwork, we have operationalized the TRUST framework across our AI product systems and solutions, embedding these principles into our design, development and ongoing management processes. This ensures that our AI-powered service management platform not only delivers exceptional value to our customers but also upholds the highest ethical and security standards.

By partnering with us, organizations can confidently leverage the power of AI to transform their service management practices while ensuring transparency, responsibility, user-centricity, security, and traceability.
