Enterprise IT’s new TCP/IP Moment: Why MCP changes everything for AI integration

This blog is co-authored by Shamith Rao, Backend & AI engineer at Atomicwork, and Gautham Menon, Product Lead at Atomicwork.

The past couple of weeks have been monumental for the Model Context Protocol (MCP). As I've watched the rapid developments unfold, I'm increasingly convinced that MCP is becoming the TCP/IP of the AI era.

My excitement stems from seeing major players move quickly: Microsoft is integrating MCP with Azure OpenAI, and AWS is integrating it with its AI offerings, enabling AI services to interact seamlessly with external systems.

OpenAI's announcement of MCP support across their products and SDKs further validates this direction. Meanwhile, Cloudflare's launch of remote MCP servers with robust authentication features opens new possibilities for secure MCP deployments.

For Atomicwork and other AI startups, this convergence around a single protocol represents a transformative opportunity. MCP is solving one of our biggest challenges: giving AI models a standardized way to reach the systems where data lives, without a custom integration for every connection just to keep communication context-aware.

Why MCP matters a lot for CIOs and technology leaders

Today, AI integration is one of the biggest headaches for enterprise IT. I've spoken with dozens of CIOs who share the same frustration: AI adoption is accelerating rapidly within their organizations, but the integration landscape remains painfully fragmented. From SaaS Apps to AI Agents, the proliferation is real.

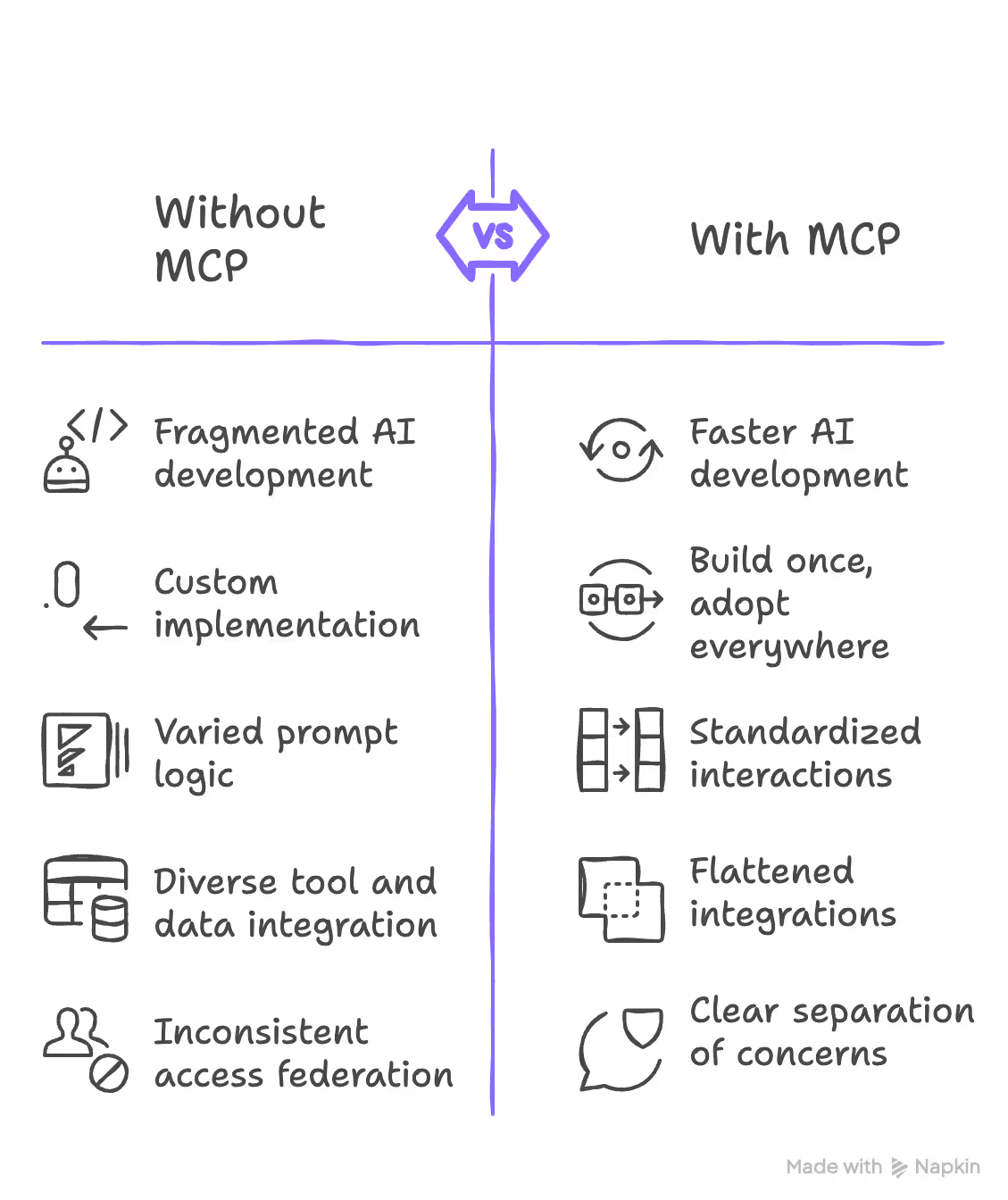

Enterprise IT teams have already built impressive capabilities where LLMs can query knowledge bases or trigger workflows, but these systems still operate in silos. Each system maintains its own authentication methods, data models, and processes, creating what engineers call the ‘MxN problem’: the need for custom integration work each time a new AI application needs to connect with a data source. With M applications and N data sources, that can mean up to M × N separate integrations.

The result? IT teams buckle under integration fatigue, brittle orchestration chains, data-driven API dependencies that lack context-aware communication, and security workarounds.

Enterprise teams then spend more time maintaining AI integrations than building new ones. What's missing is an AI-native protocol that handles communication context continuity across AI services and systems.
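To put the MxN problem in numbers, here is a small sketch. The counts are made up for illustration. The point is only that point-to-point connectors grow multiplicatively, while a shared protocol needs roughly one adapter per application and one per data source.

```python
def point_to_point_integrations(num_ai_apps: int, num_data_sources: int) -> int:
    """Every AI app needs its own connector to every data source: M x N."""
    return num_ai_apps * num_data_sources


def shared_protocol_integrations(num_ai_apps: int, num_data_sources: int) -> int:
    """Each app implements the protocol once, and so does each source: M + N."""
    return num_ai_apps + num_data_sources


if __name__ == "__main__":
    apps, sources = 5, 20  # illustrative numbers, not from any survey
    print(point_to_point_integrations(apps, sources))   # 100
    print(shared_protocol_integrations(apps, sources))  # 25
```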

Let’s break down what this ‘context’ across systems really means in terms of roles, permissions, and previous interactions.

Assume a large financial firm stores sensitive client data, financial reports, and internal documentation in a centralized knowledge base. It deploys an AI-powered assistant that retrieves relevant information for different users: junior analysts, senior managers, compliance officers, and so on. Context here means being:

- Role-aware: Based on an employee’s role, the system dynamically exposes different document resources. A junior analyst may only have access to basic reports and data, while a senior manager may access comprehensive financial summaries and high-level executive reports.

- Permission-aware: Based on the permissions granted, only certain actions are allowed. A senior manager can request a summary of a financial report and may have the AI assistant generate new reports, while a junior analyst might only be allowed to view data without modifying it.

Persisting this role-aware and permission-aware context across AI services and AI agents is key to rapid adoption within the enterprise.
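One way to picture the two kinds of context from the financial-firm example is as a pair of policy lookups. This is a minimal sketch, not how any particular product implements it: the role names, resource names, and the `is_allowed` helper are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical policy tables for the financial-firm example. A real deployment
# would load these from the organization's identity and access systems.
RESOURCES_BY_ROLE = {
    "junior_analyst": {"basic_reports"},
    "senior_manager": {"basic_reports", "financial_summaries", "executive_reports"},
}
ACTIONS_BY_ROLE = {
    "junior_analyst": {"view"},
    "senior_manager": {"view", "summarize", "generate_report"},
}


@dataclass(frozen=True)
class UserContext:
    user_id: str
    role: str


def is_allowed(ctx: UserContext, action: str, resource: str) -> bool:
    """Allow a request only if the role may see the resource AND perform the action."""
    can_see = resource in RESOURCES_BY_ROLE.get(ctx.role, set())  # role-aware
    can_do = action in ACTIONS_BY_ROLE.get(ctx.role, set())       # permission-aware
    return can_see and can_do


if __name__ == "__main__":
    analyst = UserContext("u1", "junior_analyst")
    manager = UserContext("u2", "senior_manager")
    print(is_allowed(analyst, "view", "basic_reports"))
    print(is_allowed(analyst, "summarize", "executive_reports"))
    print(is_allowed(manager, "generate_report", "executive_reports"))
```

The same `UserContext` would travel with every request, so each system the assistant touches can apply the same check instead of re-deriving who the user is.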

The Model Context Protocol, proposed by Anthropic, allows AI services to understand and remember ongoing tasks, regardless of which systems they need to access.

As Mahesh Murag of Anthropic explains in his latest workshop on building AI agents with MCP, the protocol flattens that MxN problem by enabling applications (like AI assistants and chatbots) to connect with MCP-compatible servers in a standardized manner.

So, in the above example, if the AI assistant is configured as an MCP client, it can use tools and resources connected with MCP to fetch and deliver relevant information that is permissible based on the user’s role.

With an AI service that works with MCP, it becomes easier to enforce access control consistently during interactions, maintaining the continuity of role-aware and permission-aware context across systems.

What MCP solves: A shared protocol for AI integration

MCP is a shared protocol (client-server architecture) that allows AI models to carry and maintain context across interactions and different systems.

In simple terms, MCP enables different agentic systems to talk to each other, share context, and work together seamlessly. The protocol makes context portable, persistent, and structured across your entire technology ecosystem.

Here’s an example that Mahesh shares in the Anthropic workshop cited above to illustrate the role of MCP.

Without MCP: A developer wants an AI to help research quantum computing's impact on cybersecurity. The developer must build custom integrations to search engines, web browsers, file systems, and more. Each integration requires its own authentication, error handling, and data formatting logic. If the developer later wants to add fact-checking capabilities, they'd need to build yet another set of custom integrations.

With MCP: The developer defines a research agent, gives it access to pre-built MCP servers (for web search, fetching content, and file system access), and specifies the task. The agent can plan its work, use these standardized tools without custom code, and add necessary capabilities. When the developer wants to add fact-checking, they just connect the existing MCP servers to a new fact-checking agent; no new integration work needed.
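The "with MCP" half of that example can be sketched in a few lines. Everything here is a stand-in: real MCP servers run as separate processes or remote services, and the `Agent` class and server names are hypothetical. What the sketch shows is that the fact-checking agent reuses servers that already exist rather than needing new integration code.

```python
from typing import Callable, Dict, List


# Stand-ins for pre-built MCP servers; plain functions keep the sketch self-contained.
def web_search(query: str) -> str:
    return f"search results for {query!r}"


def fetch(url: str) -> str:
    return f"content of {url}"


def read_file(path: str) -> str:
    return f"contents of {path}"


SERVERS: Dict[str, Callable[[str], str]] = {
    "web_search": web_search,
    "fetch": fetch,
    "filesystem": read_file,
}


class Agent:
    """Hypothetical agent that can only use the servers it was connected to."""

    def __init__(self, name: str, server_names: List[str]):
        self.name = name
        self.tools = {n: SERVERS[n] for n in server_names}

    def use(self, tool: str, arg: str) -> str:
        if tool not in self.tools:
            raise PermissionError(f"{self.name} is not connected to {tool}")
        return self.tools[tool](arg)


research_agent = Agent("research", ["web_search", "fetch", "filesystem"])
# Adding fact-checking later: connect the existing servers to a new agent.
fact_checker = Agent("fact_checker", ["web_search", "fetch"])

if __name__ == "__main__":
    print(fact_checker.use("web_search", "quantum computing and RSA"))
```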

MCP achieves this through three primary interfaces that form the core of the protocol:

1. Tools: These model-controlled capabilities give AI models direct agency to take actions. The MCP server exposes tools to the client application, and the AI model within the client decides when to invoke them. These can be read tools that retrieve data, or write tools that send data to applications, update databases, or even write files to your local system.

2. Resources: These are application-controlled data elements that enrich interactions. A server can expose resources like images, text files, or structured JSON data to the client application. These can be static files or dynamic content that adapts based on the user's context.

3. Prompts: These are user-controlled templates that streamline everyday interactions. Think of these as pre-defined ways to accomplish specific tasks with a server.

This three-pronged approach creates a clear separation between what the model controls, what the application controls, and what you as the user control, making interactions more intuitive and powerful across the board.
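Under the hood, MCP messages are JSON-RPC 2.0. As a rough sketch based on my reading of the public specification, each of the three interfaces has a method for listing what a server offers and a method for using it. The tool name `search_reports` and its arguments below are hypothetical, and the exact parameter shapes should be checked against the current spec before relying on them.

```python
import json
from itertools import count
from typing import Optional

_request_ids = count(1)

# Who controls each interface, plus the JSON-RPC methods used to list and use it.
PRIMITIVES = {
    "tools": {"controlled_by": "model", "list": "tools/list", "use": "tools/call"},
    "resources": {"controlled_by": "application", "list": "resources/list", "use": "resources/read"},
    "prompts": {"controlled_by": "user", "list": "prompts/list", "use": "prompts/get"},
}


def rpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope with a fresh id."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def list_request(primitive: str) -> dict:
    """Build the request that asks a server what it exposes for one interface."""
    return rpc_request(PRIMITIVES[primitive]["list"])


if __name__ == "__main__":
    # Hypothetical tool name and arguments, for illustration only.
    call = rpc_request(
        "tools/call",
        {"name": "search_reports", "arguments": {"quarter": "Q1"}},
    )
    print(json.dumps(list_request("tools"), indent=2))
    print(json.dumps(call, indent=2))
```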

How MCP transforms enterprise automation without more integration debt

For CIOs, MCP represents a fundamental shift in how AI systems integrate with your technology stack. It enables smoother workflows across tools without requiring custom integrations or brittle API handoffs for each new connection.

What's even more exciting is how MCP is the foundation for building truly effective AI agents in your enterprise. This means your teams can focus on building valuable AI capabilities rather than maintaining the plumbing between systems. When a new tool enters your ecosystem, you don't need to rebuild all your AI integrations – just ensure it supports the MCP standard.

Let’s see how this works in practice.

At its core, an effective agent is what Anthropic describes as an "augmented LLM" – an AI model enhanced with capabilities to access external systems. The AI doesn't just process inputs and generate outputs; it can query retrieval systems, invoke tools, and maintain memory. MCP provides the standardized interface for all these external connections.

Core elements of MCP to build effective AI agents

Two key MCP capabilities make agent systems particularly powerful:

1. Composability: In MCP, the client-server relationship is a logical separation, not a physical one. This means any application can simultaneously be an MCP client and an MCP server. Picture a user asking your company's AI assistant (an MCP client) to research something. This assistant can then serve other specialized agents – maybe a research agent that connects to web search tools, a data analysis agent that processes findings, and a report-writing agent that formats everything. Each specialized agent can access the tools it needs through MCP servers, with context flowing naturally between all components.

2. Sampling: This underutilized but powerful MCP capability allows servers to request completions from the client. Typically, the LLM lives in the client application, making all the intelligence decisions. With sampling, an MCP server can ask the client's LLM to perform specific reasoning tasks. For example, a database MCP server might need to generate a complex query based on natural language. Rather than implementing its own LLM, it can ask the client application to handle that intelligence work. This creates a federation of intelligence, where specialized services can tap into the client's LLM capabilities when needed.
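The sampling flow in the database example can be sketched as a callback: the server holds no model of its own and asks the client for a completion when it needs one. In the protocol itself this is, as far as I understand it, a `sampling/createMessage` request from server to client. The classes, the length check, and the fake model below are hypothetical stand-ins.

```python
from typing import Callable

# The callback a client hands to a server so the server can ask for a completion.
SamplingFn = Callable[[str], str]


class DatabaseServer:
    """Toy server with no LLM of its own."""

    def __init__(self, request_completion: SamplingFn):
        self._request_completion = request_completion

    def natural_language_query(self, question: str) -> str:
        prompt = f"Write a SQL query for: {question}"
        # Delegate the reasoning to the client's model instead of hosting one here.
        # A real server would validate the returned SQL before executing it.
        return self._request_completion(prompt)


class Client:
    """Toy client that owns the model and stays in control of sampling requests."""

    def __init__(self, llm: Callable[[str], str], max_prompt_chars: int = 2000):
        self._llm = llm
        self._max_prompt_chars = max_prompt_chars

    def handle_sampling_request(self, prompt: str) -> str:
        # The client can inspect, limit, or reject what a server asks for.
        if len(prompt) > self._max_prompt_chars:
            raise ValueError("sampling request rejected: prompt too long")
        return self._llm(prompt)


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; always returns the same query."""
    return "SELECT COUNT(*) FROM tickets;"


if __name__ == "__main__":
    client = Client(fake_llm)
    server = DatabaseServer(client.handle_sampling_request)
    print(server.natural_language_query("How many tickets are open?"))
```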

These capabilities transform how you can build agent systems in your organization. Instead of building monolithic AI applications that try to do everything, you can create orchestrator agents that delegate to specialized sub-agents, each with access to specific MCP servers. The orchestrator handles the high-level planning and coordination, while sub-agents handle domain-specific tasks with specialized tools.
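Here is a minimal sketch of that orchestrator pattern, assuming a fixed three-step plan. In a real system the plan would come from the orchestrator's LLM and each sub-agent would reach its tools through its own MCP servers; the class names and lambda handlers are hypothetical placeholders.

```python
from typing import Callable, Dict, List


class SubAgent:
    """A specialized agent, reduced here to a single handler function."""

    def __init__(self, name: str, handle: Callable[[str], str]):
        self.name = name
        self.handle = handle


class Orchestrator:
    """Runs a plan by passing each step's output to the next sub-agent."""

    def __init__(self, sub_agents: Dict[str, SubAgent], plan: List[str]):
        self.sub_agents = sub_agents
        self.plan = plan

    def run(self, goal: str) -> str:
        result = goal
        for step in self.plan:
            result = self.sub_agents[step].handle(result)
        return result


agents = {
    "research": SubAgent("research", lambda task: f"findings({task})"),
    "analysis": SubAgent("analysis", lambda task: f"analysis({task})"),
    "report": SubAgent("report", lambda task: f"report({task})"),
}
orchestrator = Orchestrator(agents, plan=["research", "analysis", "report"])

if __name__ == "__main__":
    print(orchestrator.run("quantum risk"))  # report(analysis(findings(quantum risk)))
```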

This approach shifts your focus from building integrations to designing effective agent workflows – a much higher-value activity that delivers real business impact.

- Security-aware interoperability: Security remains a top concern with AI adoption. MCP addresses this by allowing AI agents to share only what's necessary, governed by your organization's security and access controls. Context sharing isn't an all-or-nothing proposition. With an MCP-based client, your HR assistant can access financial systems only when required, retrieving only the essential data each time.

- Scalable AI strategy: As organizations adopt more domain-specific AI agents across departments like HR, IT, and Finance, there's a risk of creating new silos. MCP offers a standardized way to integrate these specialized assistants so that they become part of a composable, agentic ecosystem rather than disconnected tools. When your HR assistant needs information from Finance to answer an employee's question about benefits, MCP gives it a standardized way to converse with the relevant department's AI agent, which abstracts the actual integration with that department's knowledge base behind tools, so data doesn't have to be duplicated across systems.

- Future-proofing AI investments: Perhaps most importantly, MCP represents a movement toward open collaboration between AI models and systems. This reduces vendor lock-in and enables orchestration across platforms, protecting your AI investments as the technology ecosystem evolves.

How an MCP registry aids self-evolving agentic systems

Apart from the benefits stated above, I'm also keenly following Anthropic’s work on building the MCP registry API.

Why?

Because having an MCP registry would improve the discoverability, publishing, and updating of MCP servers. The official MCP registry API would be a centralized metadata service designed to address the current fragmentation, so that agents can dynamically discover new capabilities and access relevant data on the go.

This means that when an AI agent encounters a new task or interacts with an unfamiliar system, it can proactively seek out the necessary tools to accomplish the goal via the registry, without your team having to pre-engineer every possible interaction.

Mahesh from the Anthropic team explains the role of the registry with the example of a coding agent that could query the registry to find and use an official Grafana server to check logs, even if it wasn't initially programmed to interact with Grafana.

Just as TCP/IP created the foundation for internet communication regardless of hardware or operating system, MCP is building the foundation for AI interoperability regardless of model provider or technology stack.
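Since the registry API is still being developed, the following is only a guess at what discovery could look like from an agent's point of view. The in-memory `REGISTRY`, the `verified` flag, and the `discover` function are all hypothetical and do not reflect a published interface.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class ServerEntry:
    name: str
    capabilities: FrozenSet[str]
    verified: bool  # hypothetical marker for an "official" server


# Hypothetical registry contents; a real registry would be a remote metadata service.
REGISTRY = [
    ServerEntry("grafana", frozenset({"logs", "dashboards"}), verified=True),
    ServerEntry("community-logs", frozenset({"logs"}), verified=False),
    ServerEntry("filesystem", frozenset({"files"}), verified=True),
]


def discover(capability: str, verified_only: bool = True) -> List[ServerEntry]:
    """Return registry entries that advertise the capability an agent needs."""
    return [
        entry
        for entry in REGISTRY
        if capability in entry.capabilities and (entry.verified or not verified_only)
    ]


if __name__ == "__main__":
    # A coding agent that was never wired to Grafana looks for a way to check logs.
    for entry in discover("logs"):
        print(entry.name)  # grafana
```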

The path forward

MCP is more than just a technical solution. It's a strategic framework for the future of enterprise AI. As adoption accelerates across major platforms, we're witnessing the birth of a new standard that will fundamentally change how AI systems interact with our business environments.

For CIOs looking to future-proof their AI strategies, MCP offers a clear path forward, one where integrations become simpler, context flows naturally across systems, and AI capabilities compound rather than compete.

At Atomicwork, we're leveraging MCP to accelerate enterprise AI solutions that connect seamlessly with your existing tools while maintaining the security and context awareness your business demands. Talk to us if you want to learn more :)
